Automatic Storage Management Fast Mirror Resync in Oracle 11g:

The main purpose of Introducing ASM was to provide a batter Data Redundancy to the database and to discourage RAID at hardware level. ASM provides three types of redundancy.

1. Normal Redundancy

2. High Redundancy

3. External Redundancy.

In normal Redundancy one copy of data is maintained, High Redundancy maintains two copies of data into another disks and External leave it to external sources.

In Oracle 10g, whenever a disk goes offline i.e. not accessible to Automatic Storage Management for data reading/writing purpose. It drops the disk from disk group and as per redundancy start making new copies of extents to other disks and this is a very expensive process for I/O operations.

Suppose the disk failure was a temporary issue and got fixed in few hours and disk is back now, so ASM will again start rebalanced the data using this disk. In conclusion, temporary failure of disk needs two rebalance operations first when disk goes offline and second when disk is online.

In Oracle 11g, this two time balancing is reduced by using two things Disk_repair_time and keeping track of changed extents during disk offline time. Every disk group has a default disk_repair_time (3.6 Hours) for which it can tolerate disk unavailability means By default, Oracle ASM drops a disk in 3.6 hours after it is taken offline.

The time can be specified in units of minutes (m or M) or hours (h orH), If you omit the unit, then the default unit is hours

This parameter can modify using below command.

You can use ALTER DISKGROUP to set the DISK_REPAIR_TIME attribute to a specified hour or minute value, such as 4.5 hours or 270 minutes. For example:

ALTER DISKGROUP data SET ATTRIBUTE 'disk_repair_time' = '4.5h'

ALTER DISKGROUP data SET ATTRIBUTE 'disk_repair_time' = '270m'

Applicable to normal and high redundancy disk groups .Any problems that make a failure group temporarily unavailable are considered transient failures that can be recovered by the Oracle ASM fast mirror resync feature. For example, transient failures can be caused by disk path malfunctions, such as cable failures, host bus adapter failures, controller failures, or disk power supply interruptions.

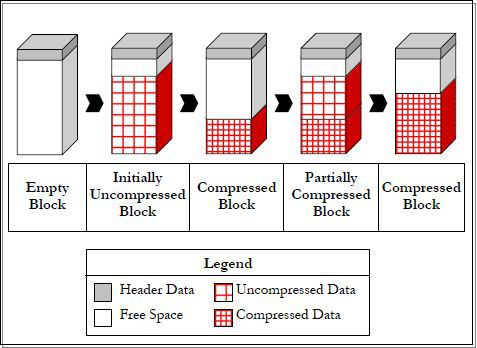

As the disk goes offline, Automatic Storage Management start keeping track of changed extents using redundant copy of disk. Automatic storage Management will only write changed data into disk when this will be online. This wait will continue until disk_repair_time is over, if disk comes back before this time ASM will only update changed data into disk.

If disk doesn’t come back until disk_repair_time, it will drop the disk from disk group and start rebalance operation.

Using this method, we can choose a time by which Automatic Storage Management will wait disk to come back and can avoid disk rebalanced operations done in previous version.

The REPAIR_TIMER column of V$ASM_DISK shows the amount of time left (in seconds) before an offline disk is dropped. After the specified time has elapsed, Oracle ASM drops the disk.

Query to find disk_repair_time for a disk group:

SQL> select name,value,group_number from v$asm_attribute where name like 'disk_repair%';

NAME VALUE GROUP_NUMBER

-------------------- ------------ ---------------------------

disk_repair_time 3.6h 1

disk_repair_time 3.6h 2

After you repair the disk, run the SQL statement ALTER DISKGROUP ONLINE DISK. This statement brings a repaired disk group back online to enable writes so that no new writes are missed. This statement also starts a procedure to copy of all of the extents that are marked as stale on their redundant copies.

The following example takes disk DATA_001 offline and drops it after five minutes.

ALTER DISKGROUP data OFFLINE DISK DATA_001 DROP AFTER 5m;

The next example takes the disk DATA_001 offline and drops it after the time period designated by DISK_REPAIR_TIME elapses:

ALTER DISKGROUP data OFFLINE DISK DATA_001;

This example takes all of the disks in failure group FG2 offline and drops them after the time period designated by DISK_REPAIR_TIME elapses. If you used a DROP AFTER clause, then the disks would be dropped after the specified time:

ALTER DISKGROUP data OFFLINE DISKS IN FAILGROUP FG2;

The next example brings all of the disks in failure group FG2 online:

ALTER DISKGROUP data ONLINE DISKS IN FAILGROUP FG2;

This example brings only disk DATA_001 online:

ALTER DISKGROUP data ONLINE DISK DATA_001;

This example brings all of the disks in disk group DATA online:

ALTER DISKGROUP data ONLINE ALL;

Querying the V$ASM_OPERATION view while you run ALTER DISKGROUP ONLINE statements displays the name and state of the current operation that you are performing. For example, the following SQL query shows values in the PASS column during an online operation.

SQL> SELECT GROUP_NUMBER, PASS, STATE FROM V$ASM_OPERATION;

GROUP_NUMBER PASS STAT

------------------- -------------- ---------------------

1 RESYNC RUN

1 REBALANCE WAIT

1 OMPACT WAIT

An offline operation does not generate a display in a V$ASM_OPERATION view query.

You can set the FAILGROUP_REPAIR_TIME and CONTENT.TYPE disk group attributes. The FAILGROUP_REPAIR_TIME disk group attribute specifies a default repair time for the failure groups in the disk group. The CONTENT.TYPE disk group attribute specifies the type of data expected to be stored in a disk group. You can set these attributes with ASMCA, ASMCMD mkdg, or SQL CREATE and ALTER DISKGROUP statements. For information about disk group attributes.

The ASMCMD lsop command shows the resync time estimate. There are separate rows in the V$ASM_OPERATION table for different phases of rebalance: disk resync, rebalance, and data compaction.

The ASMCMD online command has a power option to specify the power for the online operation. The SQL ALTER DISKGROUP REPLACE DISK statement also has the power option.

The ASMCMD chdg command provides the replace option in addition to the add and drop tags. The ASMCMD mkdg command has an additional time parameter (-t) to specify the time to offline a failure group.

Practical Approach:

I will create a transient disk path failure, fix the failure and finally put the failed disks back online. The objective is to setup “ASM Fast Mirror Resync” and monitor it before and after a transient disk path failure. In this scenario, I have used 11gR2 Oracle Database and 11gR2 ASM instance on an RHEL5.7. If you would like to replay this scenario yourself then create or find a sand box, in other words, a box which does not have any significant importance to others. Otherwise some tasks performed here may cause terrible consequences that you would never want it to happen to you.

Following tasks will be performed in the order given.

1.Perform prerequisite actions to use ASM fast mirror resync

2.Simulate a transient failure

3.Fix the transient failure

4.Monitor the Fast Mirror Reync in action

All the scripts used in the scenario are listed at the end of this post.

1: Perform prerequisite actions to use ASM fast mirror resync :

As mentioned above we need to use ASM redundancy (not external redundancy). Create a disk group having normal redundancy and a tablespace having datafile using this disk group

[oracle@PawASM ~]$ . oraenv

ORACLE_SID = [MYASM] ? +ASM

The Oracle base for ORACLE_HOME=/u01/app/oracle/product/11.2.0/grid is /u01/app/oracle

[oracle@PawASM ~]$sqlplus / as sysasm

SQL> create diskgroup PAWDG normal redundancy

failgroup PAWDG_FG1 disk

'/dev/oracleasm/disks/DISK4',

'/dev/oracleasm/disks/DISK5',

'/dev/oracleasm/disks/DISK6'

failgroup PAWDG_FG2 disk

'/dev/oracleasm/disks/DISK7',

'/dev/oracleasm/disks/DISK8',

'/dev/oracleasm/disks/DISK9' ;

SQL> select name,header_status,mount_status,state,path from V$asm_disk;

NAME HEADER_STATU MOUNT_S STATE PATH

-------------- ------------ ------- -------- --------------------------------

FORMER CLOSED NORMAL /dev/oracleasm/disks/DISK10

DATA_0000 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK1

DATA_0001 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK2

DATA_0002 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK3

PAWDG_0000 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK4

PAWDG_0001 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK5

PAWDG_0002 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK6

PAWDG_0003 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK7

PAWDG_0004 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK8

PAWDG_0005 MEMBER CACHED NORMAL /dev/oracleasm/disks/DISK9

[oracle@PawASM ~]$ . oraenv

ORACLE_SID = [MYASM] ? +ASM

The Oracle base for ORACLE_HOME=/u01/app/oracle/product/11.2.0/grid is /u01/app/oracle

[oracle@PawASM ~]$ asmcmd

ASMCMD> lsdg

State Type Rebal Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED NORMAL N 512 4096 1048576 11460 5030 2144 1443 0 N DATA/

MOUNTED NORMAL N 512 4096 1048576 22920 22810 20 11395 0 N PAWDG/

ASMCMD> exit

[oracle@PawASM ~]$ . oraenv

ORACLE_SID = [+ASM] ? MYASM

The Oracle base for ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1 is /u01/app/oracle

[oracle@PawASM ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.1.0 Production on Sun Jan 31 14:33:05 2016

Copyright (c) 1982, 2009, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production

With the Partitioning, Automatic Storage Management, OLAP, Data Mining

and Real Application Testing options

SQL> create tablespace TS_ASMSYNC datafile '+PAWDG/TS_ASMSYNC_01.dbf' size 128M;

Tablespace created.

ASM disk group attributes COMPATIBLE.RDBMS and COMPATIBLE.ASM must be set to the minimum software release of 11.1. Therefore a 10g database can not use this feature. Setup Fast Mirror Sync as below. Setting up DISK_REPAIR_TIME is optional. It has a default value of 3.6h

[oracle@PawASM ~]$ sqlplus / as sysasm

SQL*Plus: Release 11.2.0.1.0 Production on Sun Jan 31 14:32:46 2016

Copyright (c) 1982, 2009, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production

With the Automatic Storage Management option

SQL> alter diskgroup PAWDG set attribute 'compatible.asm' = '11.2.0.0.0';

Diskgroup altered.

SQL> alter diskgroup PAWDG set attribute 'compatible.rdbms' = '11.2.0.0.0';

Diskgroup altered.

SQL> alter diskgroup PAWDG set attribute 'disk_repair_time'='5h';

Diskgroup altered.

List the new attributes we have just updated using the script dg_attribs.sql

SQL> @dg_attribs.sql;

dg name attrib name VALUE READ_ON

-------------------- ------------------------------ -------------------- ---

PAWDG disk_repair_time 5h N

PAWDG au_size 1048576 Y

PAWDG sector_size 512 Y

PAWDG access_control.umask 066 N

PAWDG compatible.rdbms 11.2.0.0.0 N

PAWDG cell.smart_scan_capable FALSE N

PAWDG access_control.enabled FALSE N

PAWDG compatible.asm 11.2.0.0.0 N

8 rows selected.

2: Simulate a transient failure:

Below, as it can be seen from path of the ASM disks, I have used ASM Disks as ASM storage. Each raw device corresponds to a disk in a disk group. Since we have the ability to change file access privileges of raw devices, I can create a transient disk path failure by changing read-write permission of the raw devices.

Below is the status of the ASM disks just before the failure.

SQL> @chkasmdiskstat.sql;

DISK_NUMBER NAME PATH MOUNT_S HEADER_STATU MODE_ST STATE FAILGROUP REPAIR_TIMER

----------- ---------- --------------------------- ------- ------------ ------- -------- ---------- ------------

0 DATA_0000 /dev/oracleasm/disks/DISK1 CACHED MEMBER ONLINE NORMAL DATA_0000 0

1 DATA_0001 /dev/oracleasm/disks/DISK2 CACHED MEMBER ONLINE NORMAL DATA_0001 0

2 DATA_0002 /dev/oracleasm/disks/DISK3 CACHED MEMBER ONLINE NORMAL DATA_0002 0

0 PAWDG_0000 /dev/oracleasm/disks/DISK4 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

1 PAWDG_0001 /dev/oracleasm/disks/DISK5 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

2 PAWDG_0002 /dev/oracleasm/disks/DISK6 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

3 PAWDG_0003 /dev/oracleasm/disks/DISK7 CACHED MEMBER ONLINE NORMAL PAWDG_FG2 0

4 PAWDG_0004 /dev/oracleasm/disks/DISK8 CACHED MEMBER ONLINE NORMAL PAWDG_FG2 0

5 PAWDG_0005 /dev/oracleasm/disks/DISK9 CACHED MEMBER ONLINE NORMAL PAWDG_FG2 0

9 /dev/oracleasm/disks/DISK10 CLOSED FORMER ONLINE NORMAL 0

10 rows selected.

Below I am simulating a transient disk path failure by modifying the read-write permissions of disks to 000. From this point on, ASM can not access to these disks to read from or to write.

[root@PawASM ~]# chmod 000 /dev/oracleasm/disks/DISK7 /dev/oracleasm/disks/DISK8 /dev/oracleasm/disks/DISK9

At this stage it is better to execute a workload to make some modifications in the existing redundant extent copies in the disk failure group PAWDG_FG1. It will also make ASM to realize that some disks are missing while it tries to mirror the redundant copies to the extents of failed disks.

SQL> @workload.sql;

Connected.

User created.

Grant succeeded.

User altered.

Connected.

drop table table_workload

*

ERROR at line 1:

ORA-00942: table or view does not exist

Table created.

PL/SQL procedure successfully completed.

Commit complete.

COUNT(1)

----------

1048576

SQL> exit

Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production With the Automatic Storage Management option

[oracle@PawASM ~]$

As it can be seen below, ASM can not access to any disk in failure group PAWDG_FG2 and it has taken these disks offline. Also disk repair timer has started. Besides this information, you can see further details regarding consequences of this failure in alert.log of ASM if you want.

[oracle@PawASM ~]$ sqlplus / as sysasm

SQL*Plus: Release 11.2.0.1.0 Production on Sun Jan 31 15:01:20 2016

Copyright (c) 1982, 2009, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 - 64bit Production

With the Automatic Storage Management option

SQL> @chkasmdiskstat.sql;

DISK_NUMBER NAME PATH MOUNT_S HEADER_STATU MODE_ST STATE FAILGROUP REPAIR_TIMER

----------- ---------- --------------------------- ------- ------------ ------- -------- ---------- ------------

0 DATA_0000 /dev/oracleasm/disks/DISK1 CACHED MEMBER ONLINE NORMAL DATA_0000 0

1 DATA_0001 /dev/oracleasm/disks/DISK2 CACHED MEMBER ONLINE NORMAL DATA_0001 0

2 DATA_0002 /dev/oracleasm/disks/DISK3 CACHED MEMBER ONLINE NORMAL DATA_0002 0

0 PAWDG_0000 /dev/oracleasm/disks/DISK4 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

1 PAWDG_0001 /dev/oracleasm/disks/DISK5 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

2 PAWDG_0002 /dev/oracleasm/disks/DISK6 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

3 PAWDG_0003 MISSING UNKNOWN OFFLINE NORMAL PAWDG_FG2 18000

4 PAWDG_0004 MISSING UNKNOWN OFFLINE NORMAL PAWDG_FG2 18000

5 PAWDG_0005 MISSING UNKNOWN OFFLINE NORMAL PAWDG_FG2 18000

0 /dev/oracleasm/disks/DISK7 CLOSED MEMBER ONLINE NORMAL 0

1 /dev/oracleasm/disks/DISK8 CLOSED MEMBER ONLINE NORMAL 0

2 /dev/oracleasm/disks/DISK9 CLOSED MEMBER ONLINE NORMAL 0

9 /dev/oracleasm/disks/DISK10 CLOSED FORMER ONLINE NORMAL 0

13 rows selected.

3: Fix the transient failure :

The fix is simply to revert the raw device read-write permissions to 660. This makes raw devices accessible by ASM again.

[root@PawASM ~]# chmod 000 /dev/oracleasm/disks/DISK7 /dev/oracleasm/disks/DISK8 /dev/oracleasm/disks/DISK9

Now it is time to take the disks online.

SQL> alter diskgroup PAWDG online disks in failgroup PAWDG_FG2;

Diskgroup altered.

4: Monitor the Fast Mirror Resync in action:

Below it can be seen that stale extents of the disks taken online are being resynchronized. Look at the devices below having SYNCING value in the the column MODE_STATUS

SQL> @chkasmdiskstat.sql;

DISK_NUMBER NAME PATH MOUNT_S HEADER_STATU MODE_ST STATE FAILGROUP REPAIR_TIMER

----------- ---------- --------------------------- ------- ------------ ------- -------- ---------- ------------

0 DATA_0000 /dev/oracleasm/disks/DISK1 CACHED MEMBER ONLINE NORMAL DATA_0000 0

1 DATA_0001 /dev/oracleasm/disks/DISK2 CACHED MEMBER ONLINE NORMAL DATA_0001 0

2 DATA_0002 /dev/oracleasm/disks/DISK3 CACHED MEMBER ONLINE NORMAL DATA_0002 0

0 PAWDG_0000 /dev/oracleasm/disks/DISK4 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

1 PAWDG_0001 /dev/oracleasm/disks/DISK5 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

2 PAWDG_0002 /dev/oracleasm/disks/DISK6 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

3 PAWDG_0003 /dev/oracleasm/disks/DISK7 CACHED MEMBER SYNCING NORMAL PAWDG_FG2 17816

4 PAWDG_0004 /dev/oracleasm/disks/DISK8 CACHED MEMBER SYNCING NORMAL PAWDG_FG2 17816

5 PAWDG_0005 /dev/oracleasm/disks/DISK9 CACHED MEMBER SYNCING NORMAL PAWDG_FG2 17816

9 /dev/oracleasm/disks/DISK10 CLOSED FORMER ONLINE NORMAL 0

10 rows selected.

Also syncing operation is represented with a status ONLINE in the column OPERATION of v$ASM_OPERATION view. See below.

SQL> @chkasmoper.sql

GROUP_NUMBER OPERA STAT

------------ ----- ----

1 ONLIN RUN

After syncing operation completed, redundancy has been restored and disks are online again. See below.

SQL> @chkasmdiskstat.sql;

DISK_NUMBER NAME PATH MOUNT_S HEADER_STATU MODE_ST STATE FAILGROUP REPAIR_TIMER

----------- ---------- --------------------------- ------- ------------ ------- -------- ---------- ------------

0 DATA_0000 /dev/oracleasm/disks/DISK1 CACHED MEMBER ONLINE NORMAL DATA_0000 0

1 DATA_0001 /dev/oracleasm/disks/DISK2 CACHED MEMBER ONLINE NORMAL DATA_0001 0

2 DATA_0002 /dev/oracleasm/disks/DISK3 CACHED MEMBER ONLINE NORMAL DATA_0002 0

0 PAWDG_0000 /dev/oracleasm/disks/DISK4 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

1 PAWDG_0001 /dev/oracleasm/disks/DISK5 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

2 PAWDG_0002 /dev/oracleasm/disks/DISK6 CACHED MEMBER ONLINE NORMAL PAWDG_FG1 0

3 PAWDG_0003 /dev/oracleasm/disks/DISK7 CACHED MEMBER ONLINE NORMAL PAWDG_FG2 0

4 PAWDG_0004 /dev/oracleasm/disks/DISK8 CACHED MEMBER ONLINE NORMAL PAWDG_FG2 0

5 PAWDG_0005 /dev/oracleasm/disks/DISK9 CACHED MEMBER ONLINE NORMAL PAWDG_FG2 0

9 /dev/oracleasm/disks/DISK10 CLOSED FORMER ONLINE NORMAL 0

10 rows selected.

Click on Link to download Automatic Storage Management Fast Mirror Resync in Oracle 11g-Scripts used.

Password for to Open document is Airydba@159

Thank you for Reading…This is AIRY…Enjoy