CONVERT NON ASM ( File System ) SINGLE INSTANCE DATABASE TO RAC DATABASE USING RCONFIG: Oracle 11g R2 RAC:

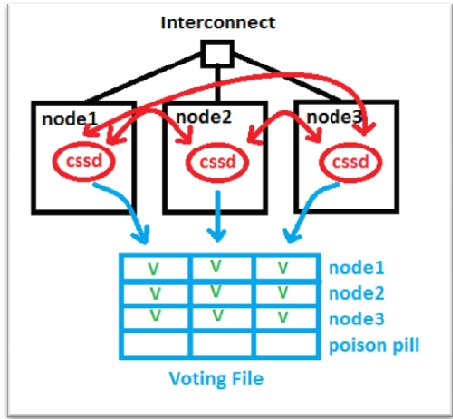

For converting standalone to RAC database, both the environments should be running on the same operating system and using the same oracle release. Oracle supports the following methods to convert a single-instance database to an RAC database:

- DBCA

- Oracle Enterprise Manager (grid control)

- RCONFIG

- Manual method

With my post, I will demonstrate you to convert non ASM single instance database to RAC database by using the rconfig command-line tool. During the conversion, rconfig performs the following steps automatically:

- Migrating the database to ASM, if specified

- Creating RAC database instances on all specified nodes in the cluster.

- Configuring the Listener and Net Service entries.

- Registering services with CRS.

- Starting up the instances and listener on all nodes.

In Oracle 11g R2, a single-instance database can either be converted to an administrator-managed cluster database or a policy-managed cluster database.

When you navigate through the $ORACLE_HOME/assistants/rconfig/sampleXMLS, you will find two sample XML input files.

- ConvertToRAC_AdminManaged.xml

- ConvertToRAC_PolicyManaged.xml

While converting a single-instance database, with file system storage, to an RAC database with Automatic Storage Management (ASM), rconfig invokes RMAN internally to back up the database to proceed with converting non-ASM to ASM. Therefore, to make backup faster it is better to increase the PARALLELISM in configuration settings, which will use more RMAN channels in the RMAN on the local node and will make backup run faster and eventually reduces the conversion duration. For example, you may configure the following in the RMAN settings of pawdb database on the local node.

RMAN> CONFIGURE DEVICE TYPE DISK PARALLELISM 3;

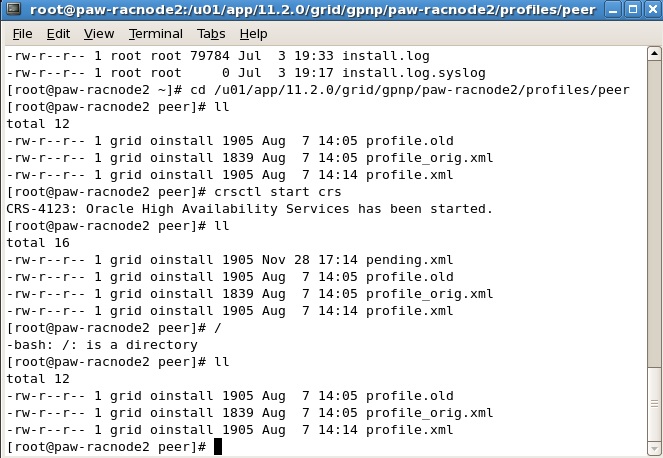

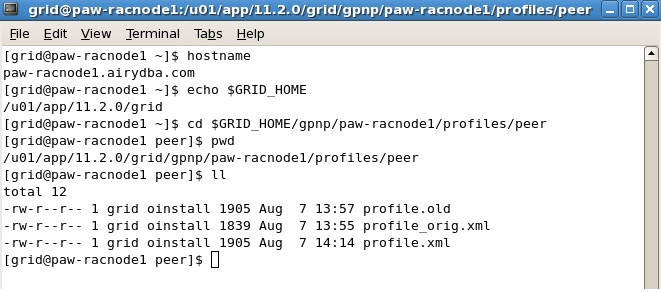

CURRENT SCENARIO:

- RAC 2 Node Cluster setup

- Names of nodes : paw-racnode1, paw-racnode2

- Name of Multi instance RAC database with ASM storage : racdb

- Name of single instance database with file system storage : pawdb

- Source Oracle home : /u01/app/oracle/product/11.2.0/db_1

- Target Oracle home : /u01/app/oracle/product/11.2.0/db_1

OBJECTIVE:

- Convert pawdb single instance Non ASM database to an Admin managed RAC database running on two nodes paw-racnode1 and paw-racnode2.

- Change storage from File system to ASM with

- Data files on +PAWDB_DATA diskgroup

- Flash recovery area on +FRA diskgroup

IMPLEMENTATION:

– Created a single Instance File system ( Non ASM ) database : pawdb

[oracle@paw-racnode1 ~ ]$ srvctl config database -d pawdb

Database unique name: pawdb

Database name: pawdb

Oracle home: /u01/app/oracle/product/11.2.0/db_1

Oracle user: oracle

Spfile:

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools: pawdb

Database instances: pawdb

Disk Groups:

Services:

Database is administrator managed

[grid@paw-racnode1 ~]$ srvctl status database -d pawdb

Instance pawdb is running on node paw-racnode1

[oracle@paw-racnode1 ~]$ . oraenv

ORACLE_SID = [orcl] ? pawdb

The Oracle base for ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1 is /u01/app/oracle

[oracle@paw-racnode1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.1.0 Production on Sat Dec 10 16:33:52 2016

Copyright (c) 1982, 2009, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 – 64bit Production

With the Partitioning, Real Application Clusters, OLAP, Data Mining

and Real Application Testing options

SQL> select name from v$datafile;

NAME

————————————————————————–u01/app/oracle/oradata/pawdb/system01.dbf

/u01/app/oracle/oradata/pawdb/sysaux01.dbf

/u01/app/oracle/oradata/pawdb/undotbs01.dbf

/u01/app/oracle/oradata/pawdb/users01.dbf

/u01/app/oracle/oradata/pawdb/example01.dbf

– Copy ConvertToRAC_AdminManaged.xml to another file convert.xml

[oracle@paw-racnode1 ~]$ cd $ORACLE_HOME/assistants/rconfig/sampleXMLs

[oracle@paw-racnode1 sampleXMLs]$ cp ConvertToRAC_AdminManaged.xml convert.xml

[oracle@paw-racnode1 sampleXMLs]$ cat convert.xml

<?xml version=”1.0″ encoding=”UTF-8″?>

<n:RConfig xmlns:n=”http://www.oracle.com/rconfig”

xmlns:xsi=”http://www.w3.org/2001/XMLSchema-instance”

xsi:schemaLocation=”http://www.oracle.com/rconfig”>

<n:ConvertToRAC>

<!– Verify does a precheck to ensure all pre-requisites are met, before the conversion is attempted. Allowable values are: YES|NO|ONLY –>

<n:Convert verify=”YES”>

<!–Specify current OracleHome of non-rac database for SourceDBHome –>

<n:SourceDBHome>/oracle/product/11.2.0/db_1</n:SourceDBHome>

<!–Specify OracleHome where the rac database should be configured. It can be same as SourceDBHome –>

<n:TargetDBHome>/oracle/product/11.2.0/db_1</n:TargetDBHome>

<!–Specify SID of non-rac database and credential. User with sysdba role is required to perform conversion –>

<n:SourceDBInfo SID=”orcl”>

<n:Credentials>

<n:User>sys</n:User>

<n:Password>oracle</n:Password>

<n:Role>sysdba</n:Role>

</n:Credentials>

</n:SourceDBInfo>

<!–Specify the list of nodes that should have rac instances running for the Admin Managed Cluster Database. LocalNode should be the first node in this nodelist. –>

<n:NodeList>

<n:Node name=”node1″/>

<n:Node name=”node2″/>

</n:NodeList>

<!–Instance Prefix tag is optional starting with 11.2. If left empty, it is derived from db_unique_name.–>

<n:InstancePrefix>sales</n:InstancePrefix>

<!– Listener details are no longer needed starting 11.2. Database is registered with default listener and SCAN listener running from Oracle Grid Infrastructure home. –>

<!–Specify the type of storage to be used by rac database. Allowable values are CFS|ASM. The non-rac database should have same storage type. ASM credentials are no needed for conversion. –>

<n:SharedStorage type=”ASM”>

<!–Specify Database Area Location to be configured for rac database.If this field is left empty, current storage will be used for rac database. For CFS, this field will have directory path. –>

<n:TargetDatabaseArea>+ASMDG</n:TargetDatabaseArea>

<!–Specify Flash Recovery Area to be configured for rac database. If this field is left empty, current recovery area of non-rac database will be configured for rac database. If current database is not using recovery Area, the resulting rac database will not have a recovery area. –>

<n:TargetFlashRecoveryArea>+ASMDG</n:TargetFlashRecoveryArea>

</n:SharedStorage>

– Edit convert.xml file and make following changes :

- Verify does a precheck to ensure all pre-requisites are met, before the conversion is attempted. Allowable values are: YES|NO|ONLY : In my case , I have Taken : YES

- Specify current OracleHome of non-rac database for SourceDBHome : In my case , I have Taken : /u01/app/oracle/product/11.2.0/db_1

- Specify OracleHome where the rac database should be configured. It can be same as Source DBHome. : In my case , I have Taken : /u01/app/oracle/product/11.2.0/db_1

- Specify SID of non-rac database and credential. User with sysdba role is required to perform conversion. : In my case : database is pawdb and my sysdba user is sys and sys user password is sys and role is

- Specify the list of nodes that should have rac instances running for the Admin Managed Cluster Database. LocalNode should be the first node in this nodelist. : In my case : paw-racnode1, paw-racnode2

- Instance Prefix tag is optional starting with 11.2. If left empty, it is derived from db_unique_name. : In my case , I have Taken : pawdb ( instance names will appear pawdb1, pawdb2)

- Listener details are no longer needed starting 11.2. Database is registered with default listener and SCAN listener running from Oracle Grid Infrastructure home. : Check local and scan listeners are up and running

- Specify the type of storage to be used by rac database. Allowable values are CFS|ASM. The non-rac database should have same storage type. ASM credentials are no needed for conversion. : In my case: ASM ( Because my storage type is ASM )

- Specify Database Area Location to be configured for rac database. If this field is left empty, current storage will be used for rac database. For CFS, this field will have directory path. : In my case , I have Taken : +PAWDB_DATA ( I have created a separate diskgroup PAWDB_DATA to store datafiles of pawdb database on ASM )

- Specify Flash Recovery Area to be configured for rac database. If this field is left empty, current recovery area of non-rac database will be configured for rac database. If current database is not using recovery Area, the resulting rac database will not have a recovery area. : In my case , I have Taken : +FRA

– Modified:

[oracle@paw-racnode1 sampleXMLs]$ vi convert.xml

<?xml version=”1.0″ encoding=”UTF-8″?>

<n:RConfig xmlns:n=”http://www.oracle.com/rconfig”

xmlns:xsi=”http://www.w3.org/2001/XMLSchema-instance”

xsi:schemaLocation=”http://www.oracle.com/rconfig”>

<n:ConvertToRAC>

<!– Verify does a precheck to ensure all pre-requisites are met, before the conversion is attempted. Allowable values are: YES|NO|ONLY –>

<n:Convert verify=”YES“>

<!–Specify current OracleHome of non-rac database for SourceDBHome –>

<n:SourceDBHome>/u01/app/oracle/product/11.2.0/db_1</n:SourceDBHome>

<!–Specify OracleHome where the rac database should be configured. It can be same as SourceDBHome –>

<n:TargetDBHome>/u01/app/oracle/product/11.2.0/db_1</n:TargetDBHome>

<!–Specify SID of non-rac database and credential. User with sysdba role is required to perform conversion –>

<n:SourceDBInfo SID=”pawdb“>

<n:Credentials>

<n:User>sys</n:User>

<n:Password>sys</n:Password>

<n:Role>sysdba</n:Role>

</n:Credentials>

</n:SourceDBInfo>

<!–Specify the list of nodes that should have rac instances running for the Admin Managed Cluster Database. LocalNode should be the first node in this nodelist. –>

<n:NodeList>

<n:Node name=”paw-racnode1“/>

<n:Node name=”paw-racnode2“/>

</n:NodeList>

<!–Instance Prefix tag is optional starting with 11.2. If left empty, it is derived from db_unique_name.–>

<n:InstancePrefix>pawdb</n:InstancePrefix>

<!– Listener details are no longer needed starting 11.2. Database is registered with default listener and SCAN listener running from Oracle Grid Infrastructure home. –>

<!–Specify the type of storage to be used by rac database. Allowable values are CFS|ASM. The non-rac database should have same storage type. ASM credentials are no needed for conversion. –>

<n:SharedStorage type=”ASM“>

<!–Specify Database Area Location to be configured for rac database.If this field is left empty, current storage will be used for rac database. For CFS, this field will have directory path. –>

<n:TargetDatabaseArea>+PAWDB_DATA</n:TargetDatabaseArea>

<!–Specify Flash Recovery Area to be configured for rac database. If this field is left empty, current recovery area of non-rac database will be configured for rac database. If current database is not using recovery Area, the resulting rac database will not have a recovery area. –>

<n:TargetFlashRecoveryArea>+FRA</n:TargetFlashRecoveryArea>

</n:SharedStorage>

</n:Convert>

</n:ConvertToRAC>

</n:RConfig>

– Run rconfig to convert pawdb from single instance database to 2 instance RAC database

[oracle@paw-racnode1 sampleXMLs]$ rconfig convert.xml

Converting Database “pawdb” to Cluster Database. Target Oracle Home: /u01/app/oracle/product/11.2.0/db_1. Database Role: PRIMARY.

Setting Data Files and Control Files

Adding Database Instances

Adding Redo Logs

Enabling threads for all Database Instances

Setting TEMP tablespace

Adding UNDO tablespaces

Adding Trace files

Setting Flash Recovery Area

Updating Oratab

Creating Password file(s)

Configuring Listeners

Configuring related CRS resources

Starting Cluster Database

<?xml version=”1.0″ ?>

<RConfig version=”1.1″ >

<ConvertToRAC>

<Convert>

<Response>

<Result code=”0″ >

Operation Succeeded

</Result>

</Response>

<ReturnValue type=”object”>

<Oracle_Home>

/u01/app/oracle/product/11.2.0/db_1

</Oracle_Home>

<Database type=”ADMIN_MANAGED” >

<InstanceList>

<Instance SID=”pawdb1″ Node=”paw-racnode1″ >

</Instance>

<Instance SID=”pawdb2″ Node=”paw-racnode2″ >

</Instance>

</InstanceList>

</Database> </ReturnValue>

</Convert>

</ConvertToRAC></RConfig>

– Check the latest log file for rconfig while conversion is going on :

[oracle@paw-racnode1 sampleXMLs]$ ls -lrt $ORACLE_BASE/cfgtoollogs/rconfig/*.log

[oracle@paw-racnode1 sampleXMLs]$ tail -f $ORACLE_BASE/cfgtoollogs/rconfig/*.log

– Click on link to see the log generated in my conversion: rconfig_08_11_15_17_56_43

– Note that rconfig adds password file to all the nodes but entry to tnsnames.ora needs to be modified ( We have to mention scan name instead of host name ) on the local node and add the same to the other nodes.

Following is the entry I have modified on the local node and copied to rest of the nodes :

– Original:

PAWDB =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = paw-racnode1.airydba.com)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = pawdb)

)

)

– Modified:

PAWDB =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = paw-rac01-scan.airydba.com)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = pawdb)

)

)

– Add entry in /etc/oratab of paw-racnode1 as :

pawdb1: /u01/app/oracle/product/11.2.0/db_1

– Add entry in /etc/oratab of paw-racnode2 as :

pawdb2: /u01/app/oracle/product/11.2.0/db_1

– Check that the database has been converted successfully and 2 instances (pawdb1,pawdb2) are running on different nodes:

[oracle@paw-racnode1 sampleXMLs]$ srvctl status database -d pawdb

Instance pawdb1 is running on node pawracnode1

Instance pawdb2 is running on node paw-racnode2

[grid@paw-racnode1 sampleXMLs]$ crsctl stat res -t

-------------------------------------------------------------------------------- NAME TARGET STATE SERVER STATE_DETAILS -------------------------------------------------------------------------------- Local Resources -------------------------------------------------------------------------------- ora.DATA.dg ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.FRA.dg ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.LISTENER.lsnr ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.OCR_DG.dg ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.PAWDB_DATA.dg ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.asm ONLINE ONLINE paw-racnode1 Started ONLINE ONLINE paw-racnode2 Started ora.eons ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.gsd OFFLINE OFFLINE paw-racnode1 OFFLINE OFFLINE paw-racnode2 ora.net1.network ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.ons ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 ora.registry.acfs ONLINE ONLINE paw-racnode1 ONLINE ONLINE paw-racnode2 -------------------------------------------------------------------------------- Cluster Resources -------------------------------------------------------------------------------- ora.LISTENER_SCAN1.lsnr 1 ONLINE ONLINE paw-racnode2 ora.LISTENER_SCAN2.lsnr 1 ONLINE ONLINE paw-racnode1 ora.LISTENER_SCAN3.lsnr 1 ONLINE ONLINE paw-racnode1 ora.oc4j 1 OFFLINE OFFLINE ora.orcl.db 1 OFFLINE OFFLINE ora.paw-racnode1.vip 1 ONLINE ONLINE paw-racnode1 ora.paw-racnode2.vip 1 ONLINE ONLINE paw-racnode2 ora.pawdb.db 1 ONLINE ONLINE paw-racnode1 Open 2 ONLINE ONLINE paw-racnode2 Open ora.racdb.db 1 OFFLINE OFFLINE 2 OFFLINE OFFLINE ora.scan1.vip 1 ONLINE ONLINE paw-racnode2 ora.scan2.vip 1 ONLINE ONLINE paw-racnode1 ora.scan3.vip 1 ONLINE ONLINE paw-racnode1

– Check that database can be connected remotely from second node (paw-racnde2 ) and also check that datafiles have been Converted in to ASM:

[grid@paw-racnode2 ~]$ su oracle

Password:

[oracle@paw-racnode2 grid]$ . oraenv

ORACLE_SID = [+ASM2] ? pawdb2

The Oracle base for ORACLE_HOME=/u01/app/oracle/product/11.2.0/db_1 is /u01/app/oracle

[oracle@paw-racnode2 grid]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.1.0 Production on Sun Aug 11 18:31:48 2015

Copyright (c) 1982, 2009, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.1.0 – 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

SQL> select name from v$database;

NAME

———

PAWDB

SQL> select name from V$datafile;

NAME

————————————————————————–

+PAWDB_DATA/pawdb/datafile/system.256.930333443

+PAWDB_DATA/pawdb/datafile/sysaux.257.930333527

+PAWDB_DATA/pawdb/datafile/undotbs1.258.930333589

+PAWDB_DATA/pawdb/datafile/users.259.930333597

+PAWDB_DATA/pawdb/datafile/undotbs2.270.930333939

Okay, so we are able to recognize that single instance ( Non ASM ) database pawdb has been successfully converted into RAC database. Hope you enjoyed and learn some thing from my this post. Please do comment on this post if you liked it.

Thank you for reading… This is Airy…Enjoy Learning